AI Accelerationism Goes Global

Plus: EVs unplugged, Canada First, planetary defenses, Gaza ceasefire strain, Palantir’s influence game, the roots of outrage, and more!

—An asteroid headed in our general direction now has a 2.1 percent chance of hitting Planet Earth in 2032, according to the latest update from NASA, which a few weeks ago had put the chances at 1.3 percent. In hopes of avoiding such an encounter, which in the worst case could unleash the force of 500 Hiroshima-level atomic bombs, the US, Europe, and now China are plotting anti-asteroid defenses (not in unison yet, but an Earthling can dream…).

—Shares in Chinese electric vehicle powerhouse BYD hit a record high after the company announced plans to put advanced self-driving capabilities in most of its fleet—and to partner with the now world-famous AI upstart DeepSeek to make that happen. Meanwhile, BMW promised to keep investing in gas vehicles as it braces for what one BMW board member says will be a “rollercoaster ride” in US EV demand under Trump 2.0. (More on US electric car troubles, and also on AI, below.)

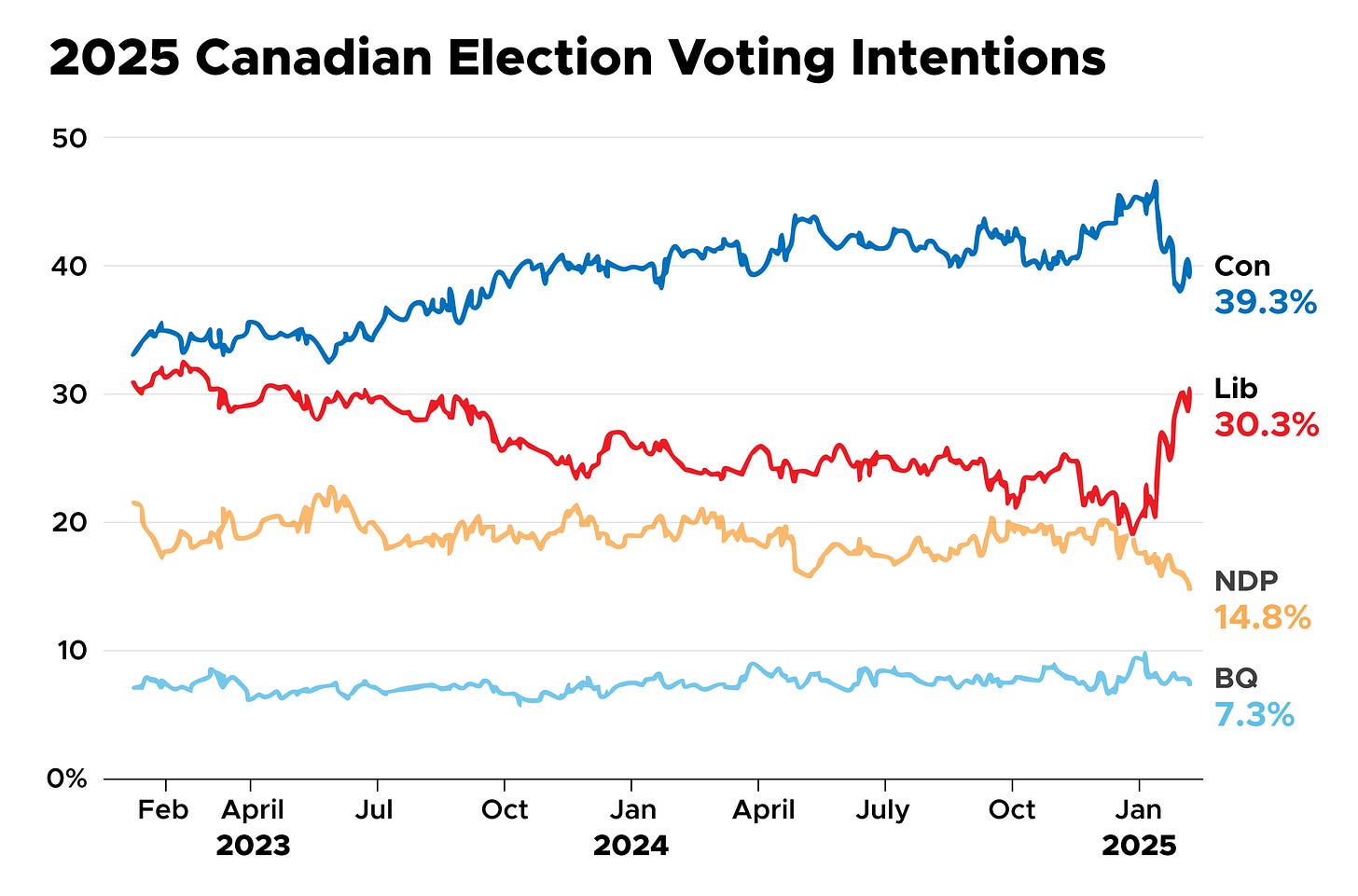

—President Trump has turned out to be bad news for a Canadian politician who was expected to ride the Trump wave—Pierre Poilievre, the Trumpesque (by Canadian standards) Conservative Party leader who hopes to be prime minister after this year’s elections. As the Guardian reports, tariff threats and talk of making Canada the “51st state” have triggered a rally-around-the-flag effect, which has helped propel the governing Liberal Party to its highest polling in two years at the expense of the now Trump-coded Conservatives.

—In UnHerd, leftist economist and former Greek finance minister Yanis Varoufakis makes the case that Trump’s aggressive use of tariffs could make America stronger in the long term. Varoufakis says Trump is trying to depreciate the dollar and end the currency’s “hegemonic status,” which could revitalize American manufacturing by making American products cheaper to foreign buyers. (For a less sanguine take on Trump’s tariffs, see last week’s edition of the Earthling.)

—This Monday marked a deadline under the Paris Agreement for nearly 200 countries to submit new targets for the reduction of carbon emissions, but only ten of them turned in their work on time. Among the countries that came through were Britain, Brazil, and Switzerland—and also the United States, which submitted its targets during the Biden administration but, under Trump, has withdrawn from the agreement.

—Following a phone call with Russian President Vladimir Putin, President Trump announced that the US and Russia are initiating talks to end the war in Ukraine. Many Democratic politicians, European officials, and mainstream media outlets characterized the move as a victory for Russia and a betrayal of Ukraine, while many advocates of US military restraint saw Trump’s decision as a long-needed acknowledgment that Kyiv cannot defeat Moscow on the battlefield. (See below for more on Trump’s attempts to end wars.)

This week at the Paris AI summit, Vice President JD Vance stood before heads of state and tech titans and said, “When conferences like this convene to discuss a cutting edge technology, oftentimes, I think, our response is to be too self-conscious, too risk-averse. But never have I encountered a breakthrough in tech that so clearly calls us to do precisely the opposite.”

Precisely the opposite of “too risk-averse” would seem to be “not risk-averse enough.” Or maybe, as both ChatGPT and Anthropic’s Claude said when asked for the opposite of “too risk-averse”: “too risk-seeking” or “reckless.” In any event, most people in the AI safety community would agree that such terms capture the Trump administration’s approach to AI regulation. And that includes people who generally share Trump’s and Vance’s laissez-faire intuitions. AI researcher Rob Miles posted a video of Vance’s speech on X and commented, “It’s so depressing that the one time when the government takes the right approach to an emerging technology, it’s for basically the only technology where that’s actually a terrible idea.”

The news for AI safety advocates gets worse: The summit’s overall vibe wasn’t all that different from Vance’s. The host, French President Emmanuel Macron, after announcing a big AI infrastructure investment, said that France is “back in the AI race” and that “Europe and France must accelerate their investments.” European Commission President Ursula von der Leyen vowed to “accelerate innovation” and “cut red tape” that now hobbles innovators. China and the US may be the world’s AI leaders, she granted, but “the AI race is far from being over.” All of this sat well with the corporate sector. As Axios reported, “A range of tech leaders, including Google CEO Sundar Pichai and Mistral CEO Arthur Mensch, used their speeches to push the acceleration mantra.”

Seems like only yesterday Sundar Pichai was emphasizing the need for international regulation, saying that AI, for all its benefits, holds great dangers. But, actually, that was back in 2023, when people like OpenAI’s Sam Altman were also saying such things. That was the year world leaders convened at Britain’s Bletchley Park to discuss ways to collectively address AI risks, including catastrophic ones. The idea was to hold annual global summits on the international governance of AI. In theory, the Paris summit was the third of these (after the 2024 summit in Seoul). But you should always read the fine print: Whereas the official name of the first summit was “AI Safety Summit,” this year’s version was “AI Action Summit.” The Axios story ran under the headline “‘Don’t miss out’ replaces ‘doom is nigh’ at Paris’ AI summit.”

The statement that came out of the summit did call for AI “safety” (along with “sustainable development, innovation,” and many other virtuous things). But there was no elaboration. Nothing, for example, about preventing people from using AIs to help make bioweapons—the kind of problem you’d think would call for international regulation, since pandemics don’t recognize national borders (and the kind of problem that some knowledgeable observers worry has been posed by OpenAI’s recently released Deep Research model).

MIT physicist Max Tegmark tweeted on Monday that a leaked draft of the summit statement seemed “optimized to antagonize both the US government (with focus on diversity, gender and disinformation) and the UK government (completely ignoring the scientific and political consensus around risks from smarter-than-human AI systems that was agreed at the Bletchley Park Summit).” And indeed, Britain and the US refused to sign the statement. The other 60 attending nations, including China, signed it.

Journalist Shakeel Hashim wrote about the world’s journey from Bletchley Park to Paris: “What was supposed to be a crucial forum for international cooperation has ended as a cautionary tale about how easily serious governance efforts can be derailed by national self-interest.” But, he said, the Paris summit may have value “as a wake-up call. It has shown, definitively, that the current approach to AI governance is broken. The question now is whether we have time to fix it.”

Over the past decade, news outlets and social media platforms have earned a lot of money by provoking outrage—but why do people get so mad about politics, anyway? Neuroscientist Leor Zmigrod considers this question and others in a review of a new book, Outraged, by social psychologist Kurt Gray, who argues that outrage at its heart is a form of defensive anger in response to fears of potential harm. According to Gray, if we try to understand the fears of our political adversaries—and who or what they want to protect—then we’ll get along much better.

Gray also sets out to challenge three widespread notions: that humans evolved to be predators (no: we were mainly prey), that morality is built on a variety of foundations (no: protection from harm is at the heart of it all), and that facts persuade people (no: emotions and stories are what work). To combat polarization and bridge divides, Gray says, people should share personal stories of suffering and seek to understand the roots of others’ fears.

Zmigrod has doubts about some of these ideas. “Moral intuitions are deeply sculpted by ideologies, control and power,” she writes, arguing that reducing them to one factor may cost us more insight than we gain. As for the idea of sharing hard-luck stories as opposed to statistics? “I confess that, to me, this post-factual, anecdote-trading world feels uneasy and somewhat frightening,” Zmigrod writes. “The market for exchanging narratives of victimhood—and dismissing evidence—is already saturated.”

For much of this week, the ceasefire in Gaza was teetering on the edge of collapse. Hamas, citing alleged Israeli violations of the truce agreement, threatened to delay the release of three hostages who were set to be freed on Saturday. Israeli Defense Minister Israel Katz, for his part, warned that Hamas will have “hell to pay” if it follows through on that threat, a warning bolstered by Israel’s decision to call up reservists in preparation for a potential return to war.

On Friday, Hamas appeared to pull back from the brink by announcing that it would go forward with the Saturday hostage release. If carried out, the move would likely delay a return to war in the short term. But for the ceasefire to become a lasting peace, policymakers will have to examine the roots of this week’s crisis. A closer look suggests that the biggest obstacle to an enduring ceasefire may well be the man who deserves the most credit for securing the deal in the first place: President Trump.

The problems began on Feb. 1, when Trump first floated a plan to displace Palestinians from Gaza and force them to settle in Jordan or Egypt, a suggestion that legal scholars say would constitute a severe violation of international law. This idea dramatically reduced the incentives for Israel and, by extension, Hamas to comply with the ceasefire agreement.